Decade of Inauthenticity?

When everything looks perfect and alike, how to spot what's still genuine and "real"? Why does it matter?

🎉 🚀 ✅ 👍

bla bla bla bla

🤗 ➡️ 🙏 🥳

Look familiar?

It’s all that registers in my brain when I go through my LinkedIn feed these days. A few emoticons plus some noise in text or visual form, with lots of nuisance comments (as if a bot were writing them).

We got used to this after years of social media and the professional rise of the influencers, streamers and content creators that came with it. However, now that AI has “ingested” this way of writing and communicating, it is becoming AI’s own standard of expression: a lacklustre style that opens up a big gap in human authenticity.

Lazy entrepreneurs, investors and career-budding executives are taking to ChatGPT (and the like) en masse to appear to be what they’re afraid of not being in the first place: genuine, authentic versions of themselves, with all the bells and whistles of imperfection and lack of polish that we all have under the hood somewhere.

I expected this to happen in a few years, a decade perhaps. But not now.

We had already acquired a dangerous liking for sheer convenience, and now we are topping it up with a taste for laziness and obedience. In business I now see an army of walking zombies with an invisible stamp on their foreheads saying:

“I don’t know how to do this, but AI can help me to look like a better version of myself.”

Why not acknowledge the real problem first and BECOME a better version of yourself? Surely that would be far more sustainable, wouldn’t it?

The Problem with Same-Sameness

Over the last months I’ve had my fair share of dealing with supposedly visionary outlines and strategic business plans, pitch decks, social media posts, research articles and even simple storytelling — all created by the same sameness: AI.

I find it boring, numbing and, quite frankly, a bit like these professors do, insulting:

“Humans may be the only species smart enough to build technology to replace themselves, yet dumb enough to simultaneously celebrate the mass exodus of intelligence that comes with it.”

Originality goes out of the window and authenticity becomes a Veblen good (yes, you may have to look that up, as an AI would never use this term lightly: it could challenge too many readers, making the text less likely to be successful. I’m willing to take that risk).

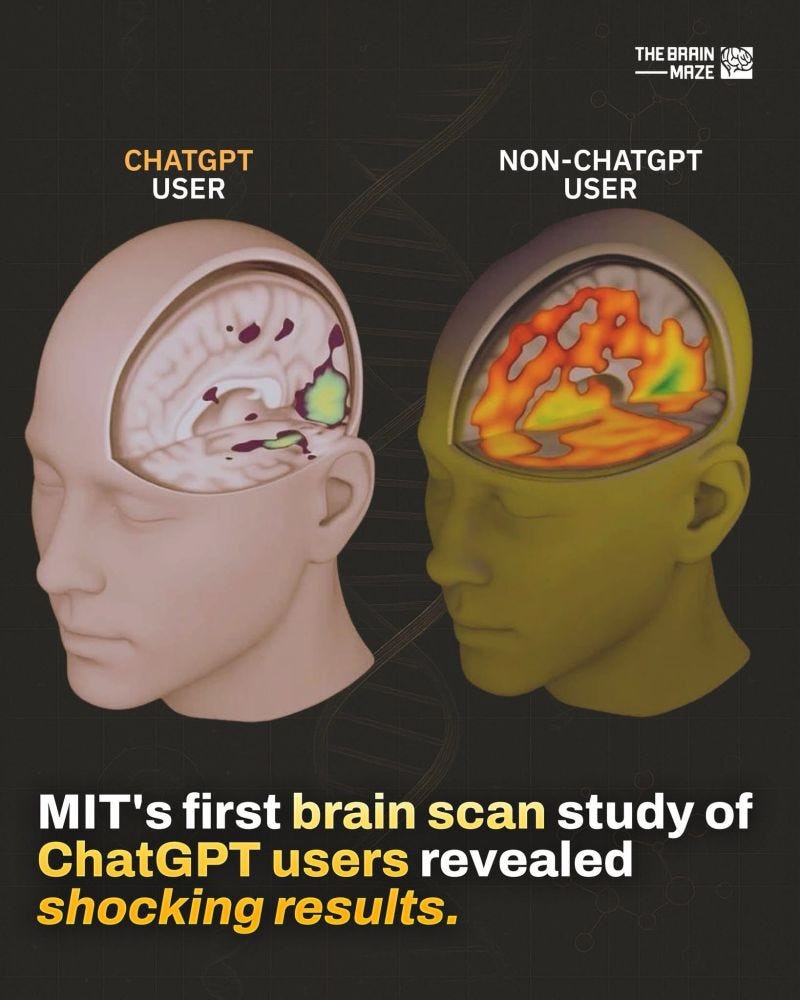

There is so much evidence now that heavy users of AI tools are actually becoming dumb and dumber. Don’t believe me? Just see what Neuroscientist Dr Farhan Khawaja has to say about it:

When confronted with some of these arguments, runaway excuser types typically take the same shortcuts as elsewhere in life. To them it feels like the only smart move they can make now in order to successfully upgrade their lives and careers.

Unfortunately, what they don’t realize is that this approach makes them completely dependent [1], and that it could end up being the last ability they have left: once they’ve begun outsourcing their creative juices to AI, they’re on the hook forevermore. No different from a drug addict or a startup that only survives on raising more funds.

The best excuse I’ve heard?

AI stands for “Augmented” intelligence. “I only use it to augment my own abilities and get more done, more productively”.

Don’t get me wrong, I’m all for that and have been at the forefront of this development since 1994, when I first used ViaVoice solutions to empower my computer and phone systems with voice-enabled command prompts and speech-to-text recognition.

But outsourcing our creativity (e.g. individual input prompts) only to end up with the same assimilated and computed AI output could eventually turn out to be a recipe for disaster.

Avoid the AI Entanglement-Trap

I believe there are many people, maybe just like me, who have been deeply entrenched in the development of AI for decades and who have become entangled with its technology-driven abilities. They are slowly fathoming (albeit without wanting to acknowledge the fact) that, without realizing the dependencies [2] we’ve created for ourselves in the process, we may one day wake up in a very different world from the one we originally set out to create with said innovation.

Terms like “ethical” or “responsible” AI really don’t solve the problem here. AI doesn’t turn a profit. The energy consumed to run all this computing power is far greater than the 12 W to 20 W required to power our biological brain, with its billions of interconnected neurons, supercharged by intergenerational learnings and habitual memories, combined with the ability to tap into nearly unlimited storage.

Why don’t we focus our attention on how to better use a larger percentage of that brain we already have first and then see how much of the (still helpful) AI we really need?

What if one day access to certain AI tools is dependent on your financial capacity to afford it, your social credit to access it or a privileged role in society or the government to even be eligible in the first place?

A generation or two on, what would then have happened to our innate abilities and intelligence that we had previously internalized over theoretically aeons of time?

Are we really prepared to give up before the war has even started?

I believe there is a solution.

Become an Authenticity Detector

Just as a lot of people develop a “BS Detector” in their respective working domain and become really good at it (a parent who knows when a kid is fooling them, a doctor who knows when the patient isn’t playing along, a banker who senses the actual financial viability of a venture despite the very different-sounding story, etc.), we can develop an “Authenticity Detector” as part of our human nature.

In fact, at a subconscious level at least, this movement has probably already started and it’s just a matter of time until more people wake up to it. And NO, it does NOT require yet another technology (or “algorithm”) to detect (and thereby deem) what is authentic and what’s not. Humans KNOW. It’s just that most people used to AI now simply don’t pay enough attention yet to the underlying intention, effort and feeling someone puts into the words carrying the information.

When human energy has indeed gone into the process of being truly creative, I have personally noticed that it’s far easier and more natural for me to follow such text, voice, video or narrative than when it is the output of an AI, no matter how good the prompts written by AI’s accomplice.

Once more people start to wake up to this fact and consciously embed it into their innate sixth-sense abilities, a renaissance of human intelligence and creativity is waiting to happen. Then (who knows), if we could pass such a skill on over generations, it’s game over for the more evil forms of AI and their human assistants trying to force control over the masses.

I’m afraid there will then be a vast group of people who will eventually have to make a tough call for themselves: remain in such a convenient Matrix without a say or wake up to fight another day.

The Power of Intent

Breaking out of a present or future AI prison ain’t gonna be easy. Many heavy AI users have already become so used to overestimating their abilities and ignoring AI’s limitations that they’re blind to their own ignorance and AI’s arrogance:

A new study revealed that when interacting with AI tools like ChatGPT, everyone—regardless of skill level—overestimates their performance. Researchers found that the usual Dunning-Kruger Effect disappears, and instead, AI-literate users show even greater overconfidence in their abilities.

The study suggests that reliance on AI encourages “cognitive offloading,” where users trust the system’s output without reflection or double-checking. Experts say AI literacy alone isn’t enough; people need platforms that foster metacognition and critical thinking to recognize when they might be wrong. [3]

Above all, what people need in future isn’t more “platforms” or “technology”, it’s more “critical thinking”. But that has to start with the Power of Intention and the question: “What do we really want?”

By changing our intention e.g. from “I need to quickly rise in the ranks by looking good” to “I need to become the best (self-reliant) version of myself”, we become stronger and claim independence in a world that’s littered with dependencies and control.

By writing and expressing ourselves with a clear motive, mission and purpose, we speak to the hearts and minds of people, even if the masses of AI-powered bots and fake profiles do not give us the likes, shares and comments we would otherwise deserve.

Deep intention goes a long way but it also needs time. It can be the means to a space mission or the starting point of a revolution.

I’ve put a decent amount of energy and intention into this article, purposefully not using AI prompts. Let’s see how you react to it.

Have a great start to the week,

Toby

Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task.

arXiv: Related study pre-print PDF (not yet peer reviewed).

Very true. It's an interesting idea that humans are the only species, smart enough and yet stupid enough to invent technology that can replace them.

What you're talking about has happened many times; not many can thatch a house, read a map, plough a field etc.

The key, I imagine, is to decide what skills are worth keeping and what are part of human evolution?

This is a great summary of the dynamics and reflections on where things are heading. I’m a big fan of the action-reaction mental-model of the world and social progress. GenAI/LLM AI applications are causing a lot of new actions, yet there will be a reaction to those changes, and new actions, reactions, etc and a continued back and forth as people figure out what range of trade offs they are comfortable with.

I see this most obviously in business. People are increasingly biased towards face to face discussions with filtered / orchestrated groups of people because telemarketing, LinkedIn and email are increasingly “hyper personalised nonsense”.

People will become increasingly adept at figuring out what is honest and what is engineered, but also at evaluating credibility beyond first impressions. There was a time when you could build a relationship via text because well thought out and context aware messages were hard. Now that it’s easier, the line moves.

I also wonder how much of this is because it’s still possible to capture some alpha by faking it with AI. Yet it also seems that window is closing quickly. Once there isn’t any “easy” money through slacking off with AI, people will be forced to find other ways to differentiate.

Thank you for sharing! So many nice ideas to consider.